Shape the future of energy trading — design and build scalable data platforms that power real-time trading decisions and accelerate the transition to sustainable energy.

Work at the intersection of data, cloud, and trading — collaborate with engineers, quants, and traders to deliver end-to-end, high-performance data solutions on Azure, Databricks, and Snowflake.

Innovate in a high-impact environment — join a cross-functional team where technical excellence, experimentation, and collaboration drive smarter, data-driven energy markets.

Why choose Eneco?

At Eneco, we’re working hard to achieve our mission: sustainable energy for everyone. Learn more about how we’re putting this into action in our One Planet Plan.

What you’ll do

As a Data Engineer in energy trading, you will help shape technical decisions for our data platform, from setting up cloud infrastructure to developing data pipelines and models. You will work closely with other engineers, quants, and stakeholders to design and deliver end-to-end solutions that ensure reliable, scalable, and innovative data systems supporting trading activities.

Is this about you?

Must have:

Demonstrated expertise in designing, optimizing, and implementing large-scale data pipelines and ETL workflows, while enforcing data quality, data governance, and data modelling standards.

Solid command of software development best practices, including API design and development, code reviews, version control, automated testing, and CI/CD pipelines, with an interest in DevOps and SRE principles for reliable production deployments.

Our stack includes Python for data processing on Databricks, Snowflake, Kubernetes, DBT, and Grafana for visualizations, and we primarily run on Azure. Proficiency in most of these tools, including their advanced features, is required.

Nice to have:

Experience designing and maintaining ML model deployment pipelines, leveraging MLOps practices in Databricks to automate model versioning, testing, deployment, and monitoring at scale.

Familiar with key DS and ML processes and techniques, such as data cleaning and preprocessing, feature engineering, feature selection, model training and tuning, model evaluation, cross-validation, hyperparameter optimization, model deployment, and monitoring model performance in production environments.

Skilled in energy trading: building and optimizing data pipelines for algorithmic trading, integrating diverse market data, using standard connectivity and backtesting methods, and partnering with traders and quants to deliver robust, scalable solutions.

You’ll be responsible for

- Designing and implementing data ingestion pipelines and ETL workflows for a variety of trading data sources, such as market prices, order-book information, executed trades, weather data, and renewable energy metrics.

- Designing efficient data models and information models to support portfolio management, market analytics, market modeling, and algorithmic trading initiatives. Developing and maintaining robust databases to implement and operationalize these models.

- Working alongside other engineers to design and implement comprehensive, end-to-end solutions for analysis, modelling, trading, and portfolio management, combining expertise to deliver cohesive and innovative outcomes.

- Collaborating with stakeholders and cross-functional teams to tailor and scale trading data systems, while providing technical guidance to support analytics, decision-making, and business goals.

- Ensuring all data solutions are built to be reliable, scalable, and adaptable, ready to support evolving business needs and future market developments.

This is where you’ll work

You will be working alongside Data Engineers, Machine Learning Engineers, Data Scientists, Data Analysts, as well as quants and traders. Together, your team will drive innovation in energy trading, supporting the transition to more sustainable energy sources while ensuring energy security and making the solutions economically viable. Collaboration and continuous learning are at the heart of our team. We celebrate successes, learn from failures, and work together to deliver solutions that make a real impact.

What we have to offer

Gross annual salary between €83.000 and €117.000

FlexBudget

Personal and professional growth

Hybrid working: home, office or abroad

Want more information about our terms of employment?

Work that works for you and the climate

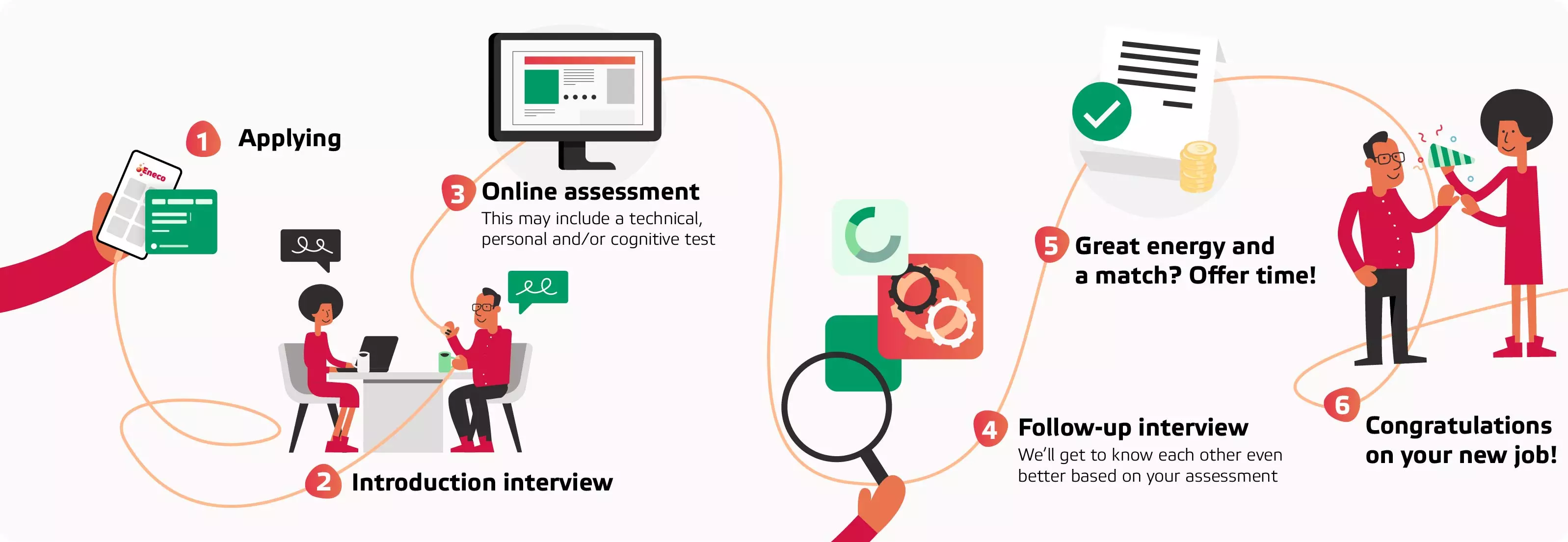

The phases of our application procedure

Want to know more about this job function?

Then please reach out to our recruiter: [email protected]

Questions about the application procedure

Venetia de Wit

+31615850813